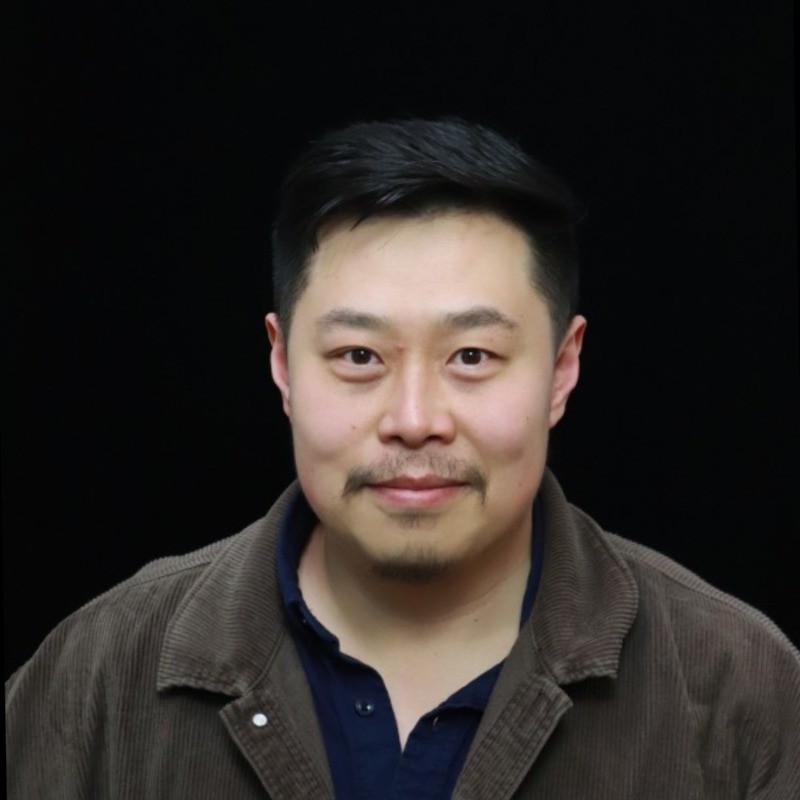

Kai Zhen

I'm a Sr. Applied Scientist at Amazon AGI building efficient AI systems. During my Ph.D. at Indiana University, I focused on neural speech and audio coding and received the 2021 Outstanding Research Award from IU's Cognitive Science Program. That research has since evolved into the speech and audio tokenization techniques now used in multi-modal LLMs. At Amazon Alexa, I was a key contributor to on-device ASR models, publishing and deploying sub-8-bit quantization-aware training and 2:4 sparsity-aware finetuning for low-footprint, low-latency inference. This work echoes my Ph.D. studies: just as compressing audio means reducing bit depth and sample rate, compressing a model means reducing its numerical precision and its density, so that it can take advantage of hierarchical GPU memory without sacrificing accuracy. Since Amazon's pivot to AGI, I've worked on LLM pre-training with distributed frameworks (NeMo/Megatron) and on post-training inference optimization with TensorRT-LLM, where the same theme of "robustness and efficiency" translates to better scaling. My recent work on LLM pruning (Wanda++), in collaboration with the University of California, Santa Barbara, was highlighted by Amazon Science. I've published as first or second author at Interspeech, ICASSP, IEEE SLT, IEEE Signal Processing Letters, and IEEE T-ASLP (speech/audio coding and software-hardware co-design for on-device ASR), and at ACL and EMNLP (LLM efficiency).